Tactile Narratives in Virtual Reality

- Supervisor: Dr. Oliver Schneider

- Institution: Haptic Experience Lab, University of Waterloo

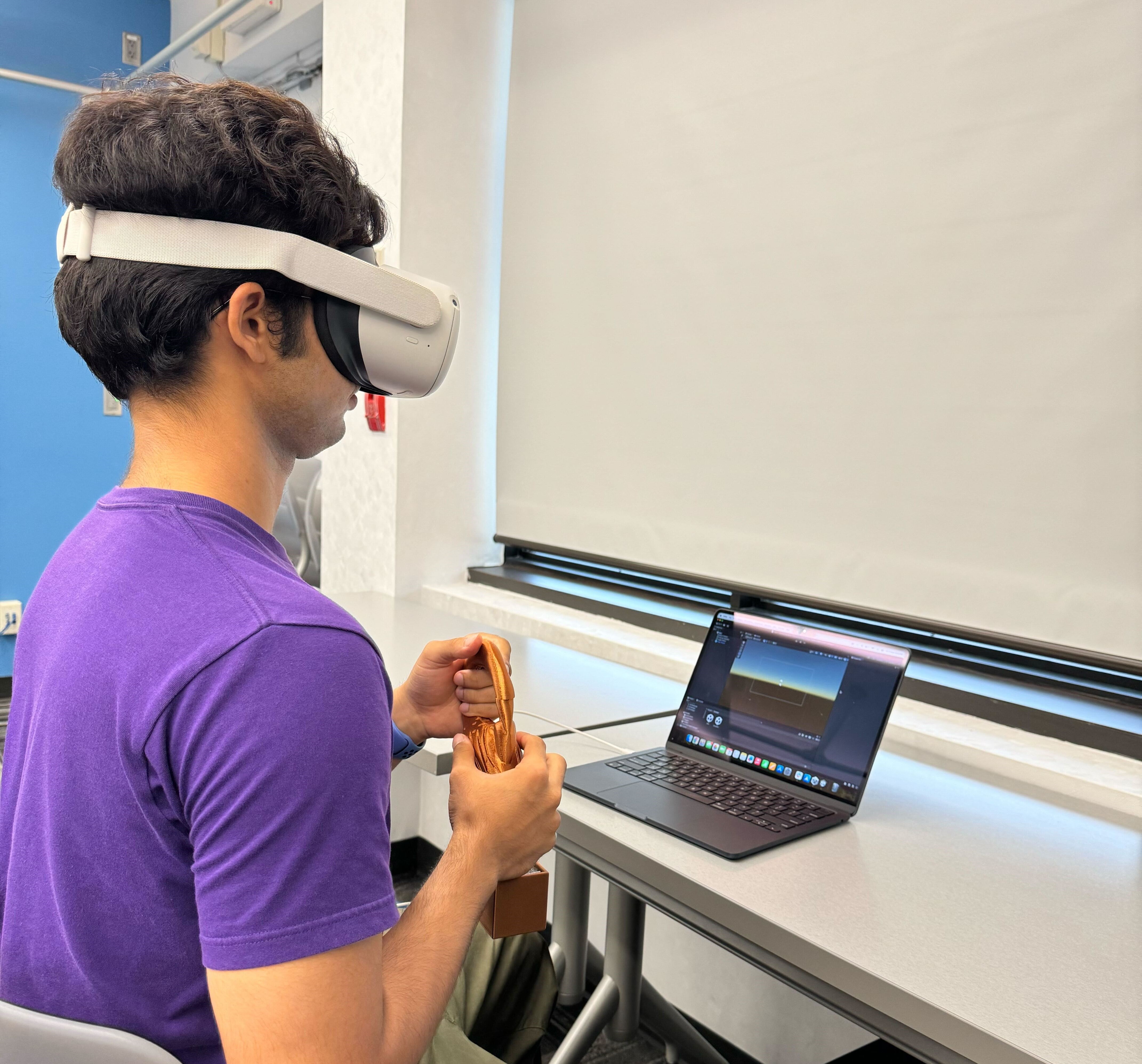

This research, which forms part of my master's thesis, explores how to design haptic feedback systems in Virtual Reality (VR) environments to support relational presence, a concept that emphasizes engagement with values such as impression, witnessing, self-awareness, and affective dissonance. Unlike traditional VR design, which focuses on physical immersion, this project develops haptic designs that strengthen users' emotional and cognitive connections and encourage a more reflective and empathetic experience. The core objective is to align haptic feedback with emotional and narrative arcs so that users can engage with challenging content, such as the historical injustices addressed by the Digital Oral Histories for Reconciliation (DOHR) initiative.

In collaboration with the DOHR project, I developed a VR user interface in Unity that integrates haptic feedback mechanisms. This interface lets users experience the narratives of the Nova Scotia Home for Colored Children in an engaging and reflective manner, building empathy and historical awareness.

Key Contributions

- Sankofa Interface Development: Designed and implemented the Sankofa Interface, a novel haptic device that translates audio cues into tactile sensations. The interface houses a microcontroller, a vibration motor, and proximity sensors within a custom 3D-printed shell shaped like the Sankofa bird, a symbol of historical awareness. Building the prototype involved selecting and optimizing materials (e.g., resin for detailed texture and durability) and embedding the hardware components so that haptic feedback is delivered precisely and in step with the narrative.

- Haptic Feedback Design via Macaron: Extended Macaron, an open-source haptic editor, and used it to craft nuanced haptic feedback patterns that support relational presence. This involved developing custom vibration waveforms synchronized with the narrative's emotional tone, drawing on Macaron’s enhanced features: waveform visualization, independent control of audio and haptic channels, and the ability to save and load custom haptic profiles. The designs were refined through iterative user testing, with a focus on creating a somatic connection between the user and the narrative.

- Software Integration in Unity: Integrated the Sankofa Interface into the VR environment using Unity, synchronizing the haptic feedback with visual and auditory elements to create a cohesive multisensory experience. Custom Unity scripts manage real-time data exchange between the microcontroller and the Unity environment, giving precise control over user interactions and ensuring that haptic feedback reinforces key narrative moments.

- Audio-to-Vibration Mapping: Developed a custom solution to map audio signals to corresponding haptic feedback, creating a multisensory experience that deepens users' emotional engagement with the content. The mapping process included tuning the amplitude and frequency of the vibrations to match the narrative’s emotional intensity, ensuring that the haptic feedback was both subtle and impactful.

- Independent Control of Audio and Haptics: Overcame Unity’s default limitations by customizing the audio system to allow independent control over audio and haptic feedback. This customization involved advanced audio programming and routing techniques to manage multiple audio outputs, ensuring that the narrative audio remained clear while delivering precise haptic feedback through the Sankofa Interface.
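To illustrate the audio-to-vibration mapping described above, here is a minimal sketch in Python. It is not the project's actual implementation, which runs in Unity and is not public. It assumes one common approach: take the RMS envelope of the audio over short windows, then scale that envelope to a motor drive level. The function names (`rms_envelope`, `to_motor_levels`), the 0-255 drive range, and the `floor` and `gain` parameters are all hypothetical choices made for illustration.

```python
import math
from typing import List, Sequence


def rms_envelope(samples: Sequence[float], window: int) -> List[float]:
    """Return one RMS value per non-overlapping window of `window` samples.

    `samples` are assumed to be normalized audio samples in [-1.0, 1.0].
    """
    if window <= 0:
        raise ValueError("window must be positive")
    envelope = []
    for start in range(0, len(samples), window):
        chunk = samples[start:start + window]
        envelope.append(math.sqrt(sum(s * s for s in chunk) / len(chunk)))
    return envelope


def to_motor_levels(
    envelope: Sequence[float],
    floor: float = 0.02,   # assumed noise floor: quieter windows stay silent
    gain: float = 1.0,     # assumed intensity scaling, tuned per narrative scene
    max_level: int = 255,  # assumed 8-bit PWM range on the microcontroller
) -> List[int]:
    """Map envelope values to integer motor drive levels in [0, max_level]."""
    levels = []
    for value in envelope:
        if value < floor:
            levels.append(0)
        else:
            scaled = min(1.0, value * gain)  # clamp so loud passages cannot overdrive
            levels.append(round(scaled * max_level))
    return levels


if __name__ == "__main__":
    sample_rate = 8000
    window = 800  # 100 ms windows at 8 kHz
    # A 440 Hz tone at half amplitude followed by silence, standing in for narration audio.
    tone = [0.5 * math.sin(2 * math.pi * 440 * n / sample_rate) for n in range(window)]
    silence = [0.0] * window
    print(to_motor_levels(rms_envelope(tone + silence, window)))
```

In this sketch `gain` is the knob for matching vibration strength to emotional intensity, and `floor` keeps quiet passages from producing a constant low buzz. A real device would likely also need smoothing between windows and a frequency mapping, both of which are omitted here.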

Publication

In revision for a planned submission to CHI 2025

[Code will be provided upon request for review. The GitHub repository will remain private until publication.]

[Thesis PDF]

[Demo video will be provided upon request due to the sensitive nature of the content in the VR experience]